Abstract

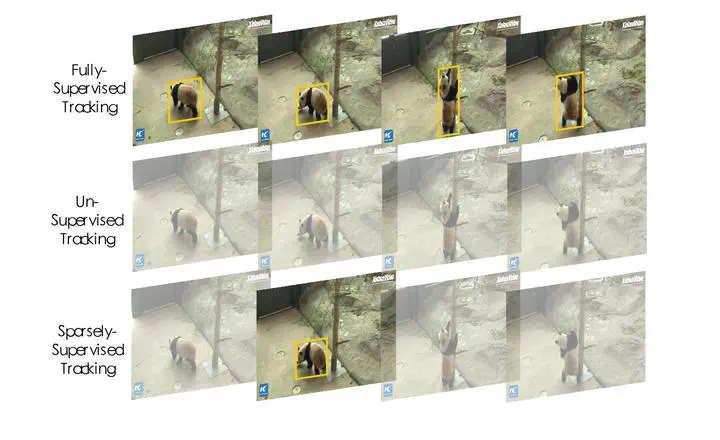

Recent years have witnessed a remarkable performance boost in data-driven deep visual object trackers. Despite this success, these trackers require millions of sequential manual labels on videos for supervised training, which imposes a heavy annotation burden. This raises a crucial question: how can we train a powerful tracker from abundant videos using limited manual annotations? In this paper, we challenge the conventional belief that frame-by-frame labeling is indispensable, and show that providing a small number of annotated bounding boxes in each video is sufficient for training a strong tracker. To this end, we design a novel SParsely-supervised Object Tracking (SPOT) framework. It regards the sparsely annotated boxes as anchors and progressively explores the temporal span to discover unlabeled target snapshots. Under the teacher-student paradigm, SPOT leverages the unique transitive consistency inherent in the tracking task as supervision, extracting knowledge from both anchor snapshots and unlabeled target snapshots. We also utilize several effective training strategies, namely IoU filtering, asymmetric augmentation, and temporal calibration, to further improve the training robustness of SPOT. The experimental results demonstrate that, given fewer than 5 labels for each video, trackers trained via SPOT perform on par with their fully-supervised counterparts. Moreover, SPOT exhibits two desirable properties: 1) SPOT enables us to fully exploit large-scale video datasets by efficiently allocating sparse labels to more videos, even under a limited labeling budget; 2) when equipped with a target discovery module, SPOT can even learn from purely unlabeled videos to gain further performance. We hope this work will inspire the community to rethink the current annotation principles and take a step towards practical label-efficient deep tracking.